Unicode Follies

Internationalizing programs is hard. Programmers are naturally more familiar with their native languages. Especially in the United States, they may not know any other languages. And it goes without saying that the stuff that you don't understand is the stuff you can't test. So programmers naturally welcome anything that makes internationalization easier. One of those things is the Unicode standard. As the name implies, Unicode is intended to be a universal standard for representing text in any of the world's many languages. The Unicode Consortium has an impressive list of members: Adobe, Apple, Google, IBM, Microsoft, and so forth. It has also been impressively successful: all the major operating systems, including Windows and Mac OS X, use Unicode internally. Unicode has a lot of advantages. However, this wouldn't be a very good rant if I didn't focus on the things that could be improved. So, as you might have guessed, that's exactly what I'm going to do today.

The standard that wasn't

One of my biggest gripes against Unicode is that it doesn't specify a canonical way of serializing text. In my naivety, I expected that a standard for representing text would tell me how to, um, represent text. But Unicode doesn't quite do that. Because when you have only one way to do things, that's like, the man, keeping you down. Totally uncool. Instead, Unicode gave us lots and lots of different ways of serializing text. We have UTF-8, UTF-16 (big endian), UTF-16 (little endian), UTF-32 (big endian), UTF-32 (little endian), UCS-2, and even more obscure encodings. All of these encodings are simply ways of representing code points.

Now what is a code point, you may ask? Is it a character? Well, not quite. Characters are made up of one or more code points. So, for example, in Unicode, "Ä" (an A with two dots over it) can be made up of two code points, U+0041 and U+0308. Now, the distinction between code points and characters makes sense from a programmer's point of view. There are things other than the letter A that can have two dots over them. Rather than giving them all their own code point, we can just combine U+0308, the dieresis code point, with whatever vowel we want. But here's what doesn't make sense: there are other ways to get the letter Ä besides U+0041, U+0308. You can also use the single code point U+00C4.

So the simple operation of comparing two strings, which was basically a memcmp back in ASCII-land, is a complete clusterfsck in Unicode. First you have to figure out what encoding the strings are in-- UTF-8, UTF-16, etc. Then you have to normalize the sequences of code points so that they represent composable characters the same way. If you're taking user input, you probably have no idea whether it's normalized or not. So you'll probably have to normalize everything, just to be safe. In fact, there's not even a single normalization form for Unicode-- there are four (NFC, NFD, NFKC, and NFKD). Did we really need four?
This is another case where having multiple ways to do things is not better. Guys, if you are designing a standard, take a stand. Have one way of doing things and stick to it. Don't come up with a standard that just says "do what thou wilt shall be the law, and the whole of the law."
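To make the comparison problem concrete, here is a minimal Python sketch of the two steps described above: decode each byte string from its (known) encoding, then normalize both to the same form before comparing. The helper name `equal_text` is made up for this example, and it assumes you already know which encoding each input uses, which in practice is often the hard part.

```python
import unicodedata

def equal_text(raw_a, enc_a, raw_b, enc_b, form="NFC"):
    """Hypothetical helper: decode two byte strings, normalize, then compare."""
    text_a = unicodedata.normalize(form, raw_a.decode(enc_a))
    text_b = unicodedata.normalize(form, raw_b.decode(enc_b))
    return text_a == text_b

composed = "\u00c4"      # one code point: LATIN CAPITAL LETTER A WITH DIAERESIS
decomposed = "A\u0308"   # U+0041 followed by U+0308 COMBINING DIAERESIS

# The same visible letter, serialized two different ways.
raw_a = composed.encode("utf-8")
raw_b = decomposed.encode("utf-16-le")

print(composed == decomposed)                          # False: code points differ
print(raw_a == raw_b)                                  # False: bytes differ too
print(equal_text(raw_a, "utf-8", raw_b, "utf-16-le"))  # True after normalizing
```

Any of the four forms works for this particular pair. The choice starts to matter once compatibility characters (ligatures, full-width letters, and so on) are involved, since NFKC and NFKD fold those and NFC and NFD do not.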

Someone set us up the BOM

It's pretty clear, even to a first-year comp sci student, that having multiple encodings in the wild is going to cause problems. You're always going to be reading text from one source and interpreting it as something that it isn't. Avoiding such situations is kind of the point of standardization. That, and eating donuts while sitting around a big table.

However, the Consortium had a solution for this problem too. That solution was BOMs, or "byte order marks." They were supposed to appear at the start of documents to identify the kind of encoding and byte order used within. Unlike with everything else, the Consortium actually took a stand on what they should look like-- one code point, U+FEFF, at the very start of the document (two bytes in UTF-16, three in UTF-8, four in UTF-32). I know, pretty bold. Couldn't they have given us more choices, like "U+FEFF, except on alternate Tuesdays when it's an HTML tag encoded in EBCDIC"? But no, U+FEFF it was.

The problem with this, of course, is that it's utterly unworkable in the real world. Especially on UNIX, the assumption is that files are flat streams of data in a standard format. So some people used the BOM and some didn't. And of course, older documents never had a BOM, because they had been created before the concept existed.

64k should be enough for anyone
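For the curious, here is roughly what BOM sniffing looks like in Python. This is a sketch, not a robust detector: the function name `sniff_bom` is invented for this example, and it only recognizes the UTF-8, UTF-16, and UTF-32 signatures. The mark is the code point U+FEFF, so its serialized length depends on the encoding: two bytes in UTF-16, three in UTF-8, four in UTF-32.

```python
import codecs

# Check the longest signatures first: the UTF-32 LE BOM (FF FE 00 00)
# begins with the UTF-16 LE BOM (FF FE).
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]

def sniff_bom(data):
    """Hypothetical helper: return (encoding, bom_length), or (None, 0) if no BOM."""
    for bom, name in _BOMS:
        if data.startswith(bom):
            return name, len(bom)
    return None, 0

with_bom = codecs.BOM_UTF16_BE + "hi".encode("utf-16-be")
encoding, skip = sniff_bom(with_bom)
print(encoding, with_bom[skip:].decode(encoding))

# A file without a BOM tells you nothing; you are back to guessing.
print(sniff_bom(b"plain old bytes"))
```

Even this has a wrinkle: UTF-16 LE text whose first character after the BOM is U+0000 is byte-for-byte indistinguishable from a UTF-32 LE BOM, so the sketch would misreport it.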

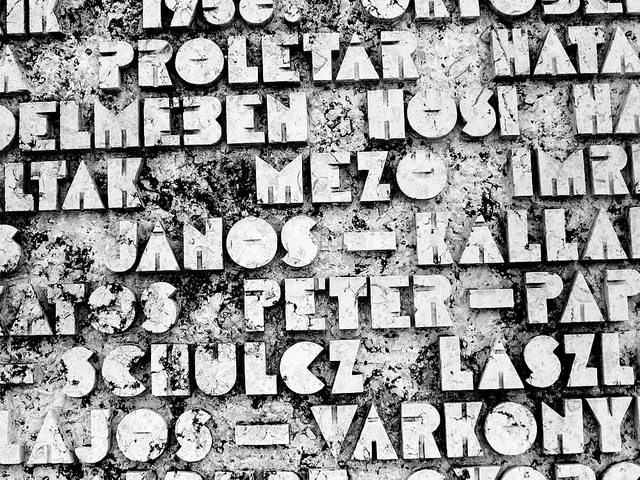

According to the Unicode FAQ, the first version of Unicode was a 16-bit encoding. The idea was that every code point could be represented with 16 bits. 65,536 code points should be enough for anyone, right? Well, no, actually. There are more than 65,536 Chinese characters in existence. So even if all you wanted to support was Chinese and English, you would already need more than 16 bits. To be fair, the average Chinese person only needs to know a few thousand glyphs to be considered fluent. Still, you really want to be able to represent the rarer characters so that you can, for example, store and view ancient documents without mangling them. The Consortium didn't see it that way-- at least at first.

Chinese has a lot of glyphs. So do Korean and Japanese. In order to stay within the self-imposed 16-bit limit, the Unicode Consortium decided to perform something called the "Han unification." Basically, the idea was that many glyphs from Chinese looked similar to glyphs in Japanese or Korean. So they were given the same code point. (Japan actually has multiple writing systems, which I'm glossing over here.) The problem with this is that although these characters may look similar, they're not the same! In the words of Suzanne Topping:

> For example, the traditional Chinese glyph for "grass" uses four strokes for the "grass" radical, whereas the simplified Chinese, Japanese, and Korean glyphs use three. But there is only one Unicode point for the grass character (U+8349) regardless of writing system. Another example is the ideograph for "one," which is different in Chinese, Japanese, and Korean. Many people think that the three versions should be encoded differently.

Eventually, the 16-bit dream faded. The original 16-bit code point space is now referred to as the "basic multilingual plane," or BMP. There are 17 planes in total, the equivalent of a little bit more than 20 bits worth of space. And now, I can finally explain the difference between UCS-2 and UTF-16. Both use 16-bit code units, but UTF-16 is variable length (a code point outside the BMP takes two units, a surrogate pair), while UCS-2 is fixed at one unit per code point. So UCS-2 is doomed to be unable to represent anything but the original 16-bit code point space (the BMP). UCS-2 is deprecated, but you may still find it kicking around in some places. It's now illegal to sell computers in China that do not conform to GB18030. This standard mandates support for code points outside of the original BMP.

Han unification is still a sore point for many in Japan and Korea. Unicode adoption in Japan has been slow, partially because of this. The same font cannot correctly represent both Japanese and Chinese Unicode text, because the same code points are used for glyphs that look different.
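The UCS-2 versus UTF-16 distinction is easier to see with numbers. The sketch below (the function name `to_utf16_units` is mine, not from any library) shows how UTF-16 turns a code point outside the BMP into two 16-bit units, which is exactly the trick UCS-2 lacks. It also checks the arithmetic on the size of the code space.

```python
def to_utf16_units(cp):
    """Hypothetical helper: return the UTF-16 code units for one code point."""
    if not 0 <= cp <= 0x10FFFF:
        raise ValueError("not a Unicode code point")
    if 0xD800 <= cp <= 0xDFFF:
        raise ValueError("surrogate code points cannot be encoded")
    if cp < 0x10000:
        return [cp]                      # BMP: one unit, same as UCS-2
    v = cp - 0x10000                     # 20 bits left to split in half
    return [0xD800 + (v >> 10),          # high surrogate carries the top 10 bits
            0xDC00 + (v & 0x3FF)]        # low surrogate carries the bottom 10 bits

print([hex(u) for u in to_utf16_units(0x8349)])    # ['0x8349']
print([hex(u) for u in to_utf16_units(0x1F4A9)])   # ['0xd83d', '0xdca9']

# 17 planes of 65,536 code points each: just over 2**20.
print(17 * 0x10000)   # 1114112
print(2 ** 20)        # 1048576
```

The surrogate range U+D800 to U+DFFF is carved out of the BMP for this purpose, which is why those values can never stand for characters on their own.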

How it should have ended

It's easy to complain about things. It's harder to make constructive suggestions. With that in mind, here is what I think the Unicode Consortium should have done:

- Only have one encoding-- UTF-8. UTF-8 is a variable-length encoding, so you never have to worry about running out of code points. It's also easy to upgrade existing C programs to UTF-8. They can continue to pass around "pointers to char." There is no flag day when everything needs to change all at once. Since its code unit is a single byte, UTF-8 avoids all the religious wars over endianness. It also is extremely compact for ASCII text, which still comprises the majority of computer text out there.

- Represent every glyph with exactly one series of code points. The Unicode normalization quagmire is annoying and pointless. Each valid series of code points should represent something unique, so that comparing strings becomes a single memory comparison again. Considering the lengths they went to to try to stuff everything in 16 bits, the Consortium gave remarkably little thought to the efficiency problems caused by denormalized forms. Obviously, there's an interoperability risk here too.

- Don't try to "unify" glyphs that look different! Text should look right in any font-- at least, assuming the font has support for the code points you're using.
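The UTF-8 claims in the first suggestion are easy to check for yourself. Here is a small Python sketch (the helper `encoded_sizes` is a name I made up) that compares how many bytes a single character takes in each encoding, and confirms that ASCII text is byte-for-byte unchanged in UTF-8 while UTF-16 gives different bytes depending on byte order.

```python
def encoded_sizes(ch):
    """Hypothetical helper: byte length of one character in three encodings."""
    return {enc: len(ch.encode(enc))
            for enc in ("utf-8", "utf-16-le", "utf-32-le")}

for ch in ("A", "\u00c4", "\u8349", "\U0001F4A9"):
    print(f"U+{ord(ch):04X}", encoded_sizes(ch))

# ASCII text is already valid UTF-8, byte for byte.
print("hello".encode("ascii") == "hello".encode("utf-8"))       # True

# UTF-16 has two byte orders for the same text; UTF-8 has only one.
print("hello".encode("utf-16-le") == "hello".encode("utf-16-be"))  # False
```

Note the trade-off this exposes: the CJK character U+8349 takes three bytes in UTF-8 but only two in UTF-16, so "compact" depends a lot on what you are writing.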

The Future

Unicode support has been improving everywhere. For C programmers, good libraries like IBM's ICU have been created to deal with Unicode. Higher-level languages usually come with Unicode support built in (although it's not always perfect). People are also slowly converging on UTF-8 as a standard for interchanging data-- at least in the UNIX world. The hateful idea of BOMs has slowly faded into the mist, along with Ace of Base and Kris Kross, two other scourges of the 1990s. At the end of the day, Unicode has made things better for everyone. But we should keep in mind the lessons learned here so that future standards bodies can do even better.

Update

Apparently Unicode now has a "pile of poo" character.

Name: PILE OF POO

Block: Miscellaneous Symbols And Pictographs

Category: Symbol, Other [So]

Index entries: POO, PILE OF

Comments: dog dirt

Version: Unicode 6.0.0 (October 2010)

HTML Entity: 💩

I guess we should all be happy that we are living in a bright future where even piles of poo are standardized and cross-platform.